Pieter Van Schalkwyk

CEO at XMPRO

The Carroll Industrial AI Agent Framework: Evaluating True AI Agency

As we navigate the evolving landscape of artificial intelligence in 2025, the term “AI Agent” has become increasingly diluted by marketing claims and superficial implementations. Michael Carroll’s* recent analysis of “Agent Washing” highlights a critical challenge: distinguishing genuine agent capabilities from rebranded automation tools. This challenge resonates particularly strongly in industrial settings, where the stakes of AI implementation directly impact operational efficiency and safety.

Building on Michael’s mention of Arthur Kordon’s fundamental axioms for industrial AI—the ability to learn, predict, and reason—this framework provides a structured approach to evaluating AI agents. While developed with industrial applications in mind, its principles apply across sectors where autonomous decision-making and adaptive behavior are crucial.

Recent articles by Anthropic and others have established important architectural distinctions between workflows and agents that help clarify this taxonomy. Workflows represent systems where LLMs and tools follow predefined code paths in an orchestrated manner, while agents are systems where LLMs dynamically direct their own processes and tool usage, maintaining control over how they accomplish tasks.
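A minimal Python sketch can make the workflow/agent distinction concrete. It does not call an LLM. A hypothetical `choose_next_step` function stands in for the model, and the tool names (`read_sensor`, `check_threshold`, `notify_operator`) are invented for illustration. Because the stand-in is itself rule-based, the sketch demonstrates control structure, not intelligence:

- The workflow runs a fixed code path.
- The agent loop lets the decision function pick each next tool and decide when to stop.

```python
from typing import Any, Callable, Dict, List, Optional

State = Dict[str, Any]

# Hypothetical tools; a real system would call sensors, historians or control systems.
def read_sensor(state: State) -> State:
    return {**state, "temperature": state["raw_reading"]}

def check_threshold(state: State) -> State:
    return {**state, "alarm": state["temperature"] > 90.0}

def notify_operator(state: State) -> State:
    return {**state, "notified": True}

TOOLS: Dict[str, Callable[[State], State]] = {
    "read_sensor": read_sensor,
    "check_threshold": check_threshold,
    "notify_operator": notify_operator,
}

def run_workflow(state: State) -> State:
    """Workflow: the code path is fixed in advance, regardless of the data."""
    for name in ("read_sensor", "check_threshold", "notify_operator"):
        state = TOOLS[name](state)
    return state

def choose_next_step(state: State) -> Optional[str]:
    """Stand-in for an LLM choosing the next tool; None means 'done'."""
    if "temperature" not in state:
        return "read_sensor"
    if "alarm" not in state:
        return "check_threshold"
    if state["alarm"] and not state.get("notified"):
        return "notify_operator"
    return None

def run_agent(state: State, max_steps: int = 10) -> State:
    """Agent loop: the decision function directs which tool runs and when to stop."""
    trace: List[str] = []
    for _ in range(max_steps):
        step = choose_next_step(state)
        if step is None:
            break
        trace.append(step)
        state = TOOLS[step](state)
    return {**state, "trace": trace}

print(run_workflow({"raw_reading": 70.0}).get("notified"))  # True
print(run_agent({"raw_reading": 70.0})["trace"])  # ['read_sensor', 'check_threshold']
```

With a normal reading, the fixed workflow still notifies the operator because that step is hard-coded. The agent loop stops after checking the threshold. In a real agent, the decision would come from a model reasoning over the current state, with a step limit such as `max_steps` as a guardrail.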

Various frameworks have emerged to support these implementations. They range from developer-focused solutions like Microsoft’s AutoGen, LangChain’s LangGraph, and Amazon Bedrock Agents to no-code platforms like XMPro’s MAGS. However, the key differentiator lies not in the implementation approach but in the system’s demonstrated capabilities. True agents can understand complex inputs, engage in reasoning and planning, use tools reliably, and recover from errors while operating independently over extended periods.

In today’s market, we see three distinct approaches:

- Traditional automation systems executing predefined rules

- AI-enhanced workflows with language interfaces

- Genuine agents capable of autonomous decision-making and learning

True industrial AI agents, as defined by both Carroll and Kordon, must demonstrate capabilities beyond simple task execution or natural language interaction. They must exhibit genuine agency—the ability to learn from experience, make autonomous decisions within defined boundaries, and adapt their strategies based on changing conditions.

The advancement of artificial intelligence demands a rigorous framework for evaluating AI systems’ capabilities as genuine agents rather than mere automated tools. Building on Michael Carroll’s foundational work and incorporating three additional requirements I’ve identified through real-world industrial projects, this framework offers systematic methods and measurable criteria for distinguishing between marketing claims and genuine capabilities, enabling better technology decisions and stronger operational outcomes across industries.

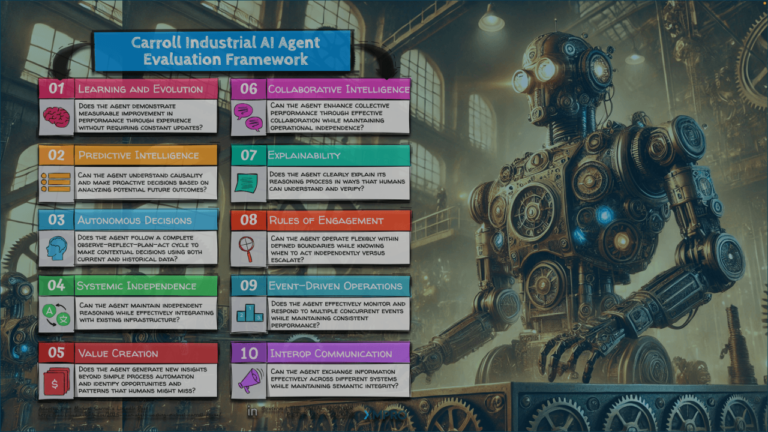

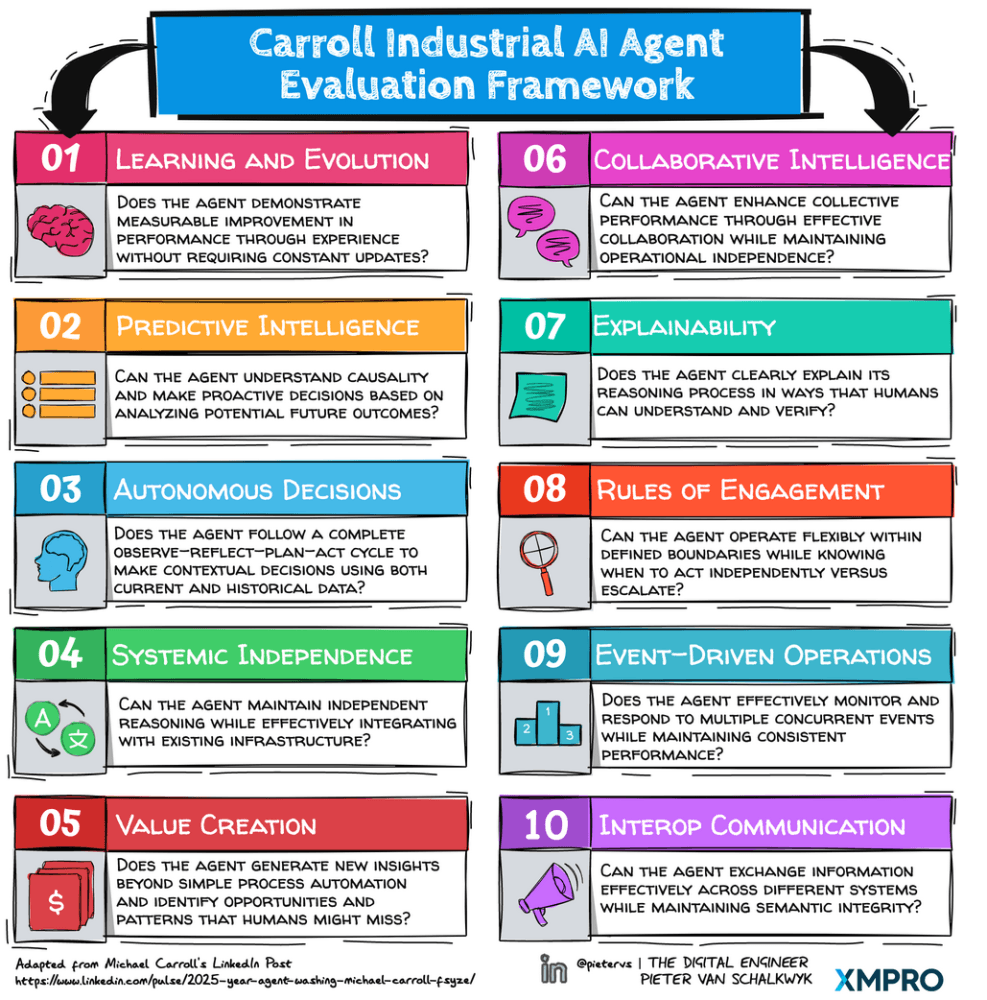

Here is what I call the “Carroll Industrial AI Agent Evaluation Framework”:

Carroll Industrial AI Agent Evaluation Framework

Understanding True Agency

An AI agent demonstrates true agency when it exhibits independent, goal-directed behavior while adapting effectively to novel situations. This agency manifests through reasoned decision-making and clear articulation of cognitive processes—distinguishing it fundamentally from systems that simply execute pre-programmed responses.

The Carroll Questions for Evaluating AI Agents

1. Learning and Evolution

The capacity for learning represents an agent’s ability to improve through experience without requiring constant external updates. The system should show measurable improvement in decision quality over time, adapting its approaches based on outcomes and new information. This continuous evolution demonstrates the agent’s ability to build genuine understanding rather than simply accumulating data.
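“Measurable improvement in decision quality over time” implies a concrete metric and a trend. As a sketch, assume the organization scores each evaluation period with some decision-quality measure between 0 and 1. One example would be the share of agent recommendations that operators accepted; the metric is an assumption, not part of the framework. A least-squares slope across periods then gives a simple first signal of whether the agent is improving.

```python
from statistics import mean
from typing import Sequence

def improvement_trend(scores: Sequence[float]) -> float:
    """Least-squares slope of decision-quality scores across evaluation periods.

    A positive slope suggests improvement per period; it says nothing about why.
    """
    if len(scores) < 2:
        raise ValueError("need at least two periods to estimate a trend")
    xs = range(len(scores))  # period index 0, 1, 2, ...
    x_bar, y_bar = mean(xs), mean(scores)
    num = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, scores))
    den = sum((x - x_bar) ** 2 for x in xs)
    return num / den

print(round(improvement_trend([0.60, 0.65, 0.70, 0.75]), 3))  # 0.05
```

A rising trend is only a first signal. On its own, it does not show that the agent built understanding rather than benefiting from easier operating conditions.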

2. Predictive Intelligence

Understanding causality, not just correlation, defines an agent’s predictive capabilities. The system must grasp not only what happened but why it happened and what could happen next. Through reasoned analysis of potential outcomes, the agent demonstrates genuine comprehension of cause-and-effect relationships in its operational domain. This capability enables proactive decision-making rather than reactive responses.

3. Autonomous Decision-Making

Genuine autonomy emerges through a continuous cognitive cycle of observation, reflection, planning, and action—each phase building upon the previous to create meaningful decisions rather than mere responses.

The agent must demonstrate contextual awareness by systematically observing its environment and reflecting on the implications of potential actions. This reflection process incorporates both immediate operational data and accumulated experience to formulate action plans that align with defined objectives. The system’s ability to move deliberately through this cognitive cycle—rather than jumping directly from observation to action—distinguishes true agency from reactive automation.
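The cycle can be sketched as a toy Python class in which each phase is a separate method, so the agent cannot jump straight from observation to action. Everything here is hypothetical: the setpoint, the 1.0 tolerance, the three-cycle persistence rule and the action names are illustrative assumptions. The reflect step is where accumulated experience enters. If the deviation has kept the same sign for three consecutive cycles despite earlier adjustments, the plan changes from adjusting to escalating.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List

@dataclass
class CycleAgent:
    """Toy agent that runs observe -> reflect -> plan -> act on every reading."""
    target: float                                # hypothetical setpoint
    tolerance: float = 1.0                       # deviation treated as "on target"
    memory: List[Dict[str, Any]] = field(default_factory=list)

    def observe(self, reading: float) -> Dict[str, Any]:
        return {"reading": reading, "error": reading - self.target}

    def reflect(self, obs: Dict[str, Any]) -> Dict[str, Any]:
        # Combine the new observation with experience: has the deviation kept
        # the same sign for the last three cycles despite earlier actions?
        recent = [m["error"] for m in self.memory[-2:]] + [obs["error"]]
        persistent = len(recent) == 3 and (
            all(e > 0 for e in recent) or all(e < 0 for e in recent)
        )
        return {**obs, "persistent": persistent}

    def plan(self, insight: Dict[str, Any]) -> str:
        if abs(insight["error"]) < self.tolerance:
            return "hold"
        if insight["persistent"]:
            return "escalate"                    # adjusting has not worked; ask a human
        return "adjust_down" if insight["error"] > 0 else "adjust_up"

    def act(self, action: str, obs: Dict[str, Any]) -> str:
        # Record what was seen and done so later reflection can use it.
        self.memory.append({**obs, "action": action})
        return action

    def step(self, reading: float) -> str:
        obs = self.observe(reading)
        return self.act(self.plan(self.reflect(obs)), obs)

agent = CycleAgent(target=50.0)
print([agent.step(r) for r in (55.0, 54.0, 53.0, 50.5)])
# ['adjust_down', 'adjust_down', 'escalate', 'hold']
```
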

4. Systemic Independence

While integration with existing systems remains crucial, genuine agents maintain cognitive independence from these systems. They interface effectively with current infrastructure while preserving their ability to reason beyond the constraints of legacy systems. This capability allows the agent to leverage existing resources while maintaining the flexibility to adapt to changing operational requirements.

5. Value Creation

An effective agent transcends simple process automation by generating novel insights and capabilities. The system identifies patterns and opportunities that human operators might miss, thereby extending rather than merely replicating human capabilities. Value creation is ultimately measured by whether those new insights and approaches improve overall operational effectiveness.

6. Collaborative Intelligence

Effective agents maintain their independence while participating productively in larger systems of both human and artificial actors. This requires balancing individual agency with collective objectives, similar to a skilled professional operating within a team environment. The agent’s collaborative capability manifests through enhanced collective performance and seamless integration with existing workflows.

7. Transparency and Explainability

Building trust requires agents to articulate their reasoning processes clearly. This transparency enables verification of decision paths and creates accountability in the system’s operations. The agent must provide explanations that bridge the gap between machine logic and human understanding, facilitating meaningful oversight and collaboration.
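One way to make decision paths verifiable is to have the agent emit a structured record alongside every action. The `DecisionRecord` below is a hypothetical format, not a standard or an XMPro schema. It pairs what was observed, the reasoning steps and the chosen action. It renders both a human-readable explanation and a JSON form suitable for an audit log.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import List

@dataclass
class DecisionRecord:
    """Hypothetical audit record pairing an action with the reasoning behind it."""
    agent_id: str
    observations: List[str]
    reasoning: List[str]
    action: str
    confidence: float                 # 0.0 to 1.0, as reported by the agent
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def explain(self) -> str:
        """Plain-language summary for operators."""
        steps = "; ".join(self.reasoning)
        return (f"{self.agent_id} chose '{self.action}' "
                f"({self.confidence:.0%} confidence) because: {steps}")

    def to_json(self) -> str:
        """Machine-readable form for audit logs."""
        return json.dumps(asdict(self), sort_keys=True)

record = DecisionRecord(
    agent_id="pump-agent-1",
    observations=["vibration 7.1 mm/s"],
    reasoning=["vibration above alert limit", "trend rising for 3 h"],
    action="schedule inspection",
    confidence=0.82,
)
print(record.explain())
# pump-agent-1 chose 'schedule inspection' (82% confidence) because: vibration above alert limit; trend rising for 3 h
```
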

8. Rules of Engagement

The agent must operate within carefully defined boundaries while maintaining flexibility to address novel situations. These rules determine not just what an agent can do, but what it should do in any given context. Through continuous awareness of operational boundaries and consistent judgment in escalation decisions, the agent demonstrates reliable adherence to its defined scope of authority.
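Rules of engagement become testable when the boundaries are expressed as data the agent checks before acting. In this sketch, the parameter name, range and maximum step size are hypothetical. The agent escalates instead of executing in three cases:

- the proposed value falls outside the allowed range,
- the change is larger than the allowed step, or
- the agent has no rule for the parameter.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

@dataclass(frozen=True)
class Boundary:
    """Hypothetical limits on one parameter the agent may change on its own."""
    low: float
    high: float
    max_step: float   # largest single change allowed without human approval

def check_action(rules: Dict[str, Boundary], param: str,
                 current: float, proposed: float) -> Tuple[str, str]:
    """Return ('execute' or 'escalate', reason) for a proposed setpoint change."""
    rule = rules.get(param)
    if rule is None:
        # Default-deny: no rule means the change is outside the agent's authority.
        return "escalate", f"{param} is outside the agent's scope of authority"
    if not rule.low <= proposed <= rule.high:
        return "escalate", f"{proposed} is outside the allowed range [{rule.low}, {rule.high}]"
    if abs(proposed - current) > rule.max_step:
        return "escalate", f"change of {abs(proposed - current)} exceeds max step {rule.max_step}"
    return "execute", "within defined boundaries"

rules = {"coolant_flow": Boundary(low=10.0, high=40.0, max_step=5.0)}
print(check_action(rules, "coolant_flow", 20.0, 23.0))  # ('execute', 'within defined boundaries')
print(check_action(rules, "feed_rate", 1.0, 1.1)[0])    # escalate
```

Defaulting to escalation for unknown parameters keeps the scope of authority explicit: the agent gains new authority only when someone adds a rule.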

9. Event-Driven Operations

The agent must maintain continuous awareness of its operational environment. It does this by subscribing to real-time operations intelligence and responding effectively to real-time events and changing conditions. This requires processing multiple concurrent situations while maintaining consistent performance quality. The ability to prioritize and manage multiple event streams distinguishes true agents from simple automated systems.
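Prioritizing several concurrent event streams can be sketched with a priority queue. This in-process example uses Python's standard `heapq` module. The stream names, payloads and priority scale (0 is most urgent) are assumptions. A production agent would typically receive such events from a message broker subscription rather than direct method calls. Events with equal priority are released in arrival order.

```python
import heapq
import itertools
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass(order=True)
class Event:
    priority: int                     # lower number = more urgent (assumed scale)
    seq: int                          # arrival order; breaks ties between equal priorities
    stream: str = field(compare=False)
    payload: str = field(compare=False)

class EventQueue:
    """Merges events from several streams and releases the most urgent first."""

    def __init__(self) -> None:
        self._heap: List[Event] = []
        self._counter = itertools.count()

    def publish(self, stream: str, priority: int, payload: str) -> None:
        heapq.heappush(self._heap, Event(priority, next(self._counter), stream, payload))

    def next_event(self) -> Optional[Event]:
        return heapq.heappop(self._heap) if self._heap else None

q = EventQueue()
q.publish("vibration", 2, "bearing trend rising")
q.publish("safety", 0, "gas detector alarm")
q.publish("energy", 2, "tariff change")
print([q.next_event().payload for _ in range(3)])
# ['gas detector alarm', 'bearing trend rising', 'tariff change']
```
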

10. Communication Interoperability

Effective agents must navigate complex communication environments, exchanging information across diverse systems and entities. This requires maintaining message integrity across different protocols while ensuring semantic understanding. The agent’s communication capabilities must support both technical compatibility and meaningful information exchange across the operational ecosystem.
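Message integrity and semantic understanding can both be carried in the message itself. The sketch below assumes a hypothetical envelope with three fields:

- a schema identifier that tells the receiver how to interpret the body,
- the body itself, with explicit units, and
- a SHA-256 digest over a canonical JSON encoding, so any receiver can confirm the content survived translation between protocols.

```python
import hashlib
import json
from typing import Any, Dict

def canonical(body: Dict[str, Any]) -> bytes:
    # Sorted keys and fixed separators give identical bytes for identical content.
    return json.dumps(body, sort_keys=True, separators=(",", ":")).encode("utf-8")

def wrap(body: Dict[str, Any], schema: str) -> Dict[str, Any]:
    """Hypothetical envelope: schema id (meaning) + body + SHA-256 digest (integrity)."""
    return {"schema": schema, "body": body,
            "sha256": hashlib.sha256(canonical(body)).hexdigest()}

def verify(envelope: Dict[str, Any]) -> bool:
    """True if the body still matches the digest computed by the sender."""
    return hashlib.sha256(canonical(envelope["body"])).hexdigest() == envelope["sha256"]

msg = wrap({"asset": "pump-07", "temperature": {"value": 81.5, "unit": "degC"}},
           schema="telemetry.v1")
relayed = json.loads(json.dumps(msg))   # simulate a hop through another transport
print(verify(relayed))  # True
relayed["body"]["temperature"]["value"] = 85.0
print(verify(relayed))  # False
```

A plain digest detects accidental corruption. Protecting against deliberate tampering would require a keyed MAC (such as HMAC) or a digital signature instead.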

Framework Application

The value of this framework emerges through systematic application to specific operational contexts. Rather than seeking perfect scores across all dimensions, organizations should use these criteria to assess fit for their particular needs and operational environment. Regular evaluation against these criteria helps track development and identify areas for improvement.

This framework provides a structured approach to evaluating AI agency, helping organizations move beyond superficial assessments to meaningful evaluation of agent capabilities. By focusing on these fundamental aspects of agency, organizations can better understand and leverage AI systems in their operations.

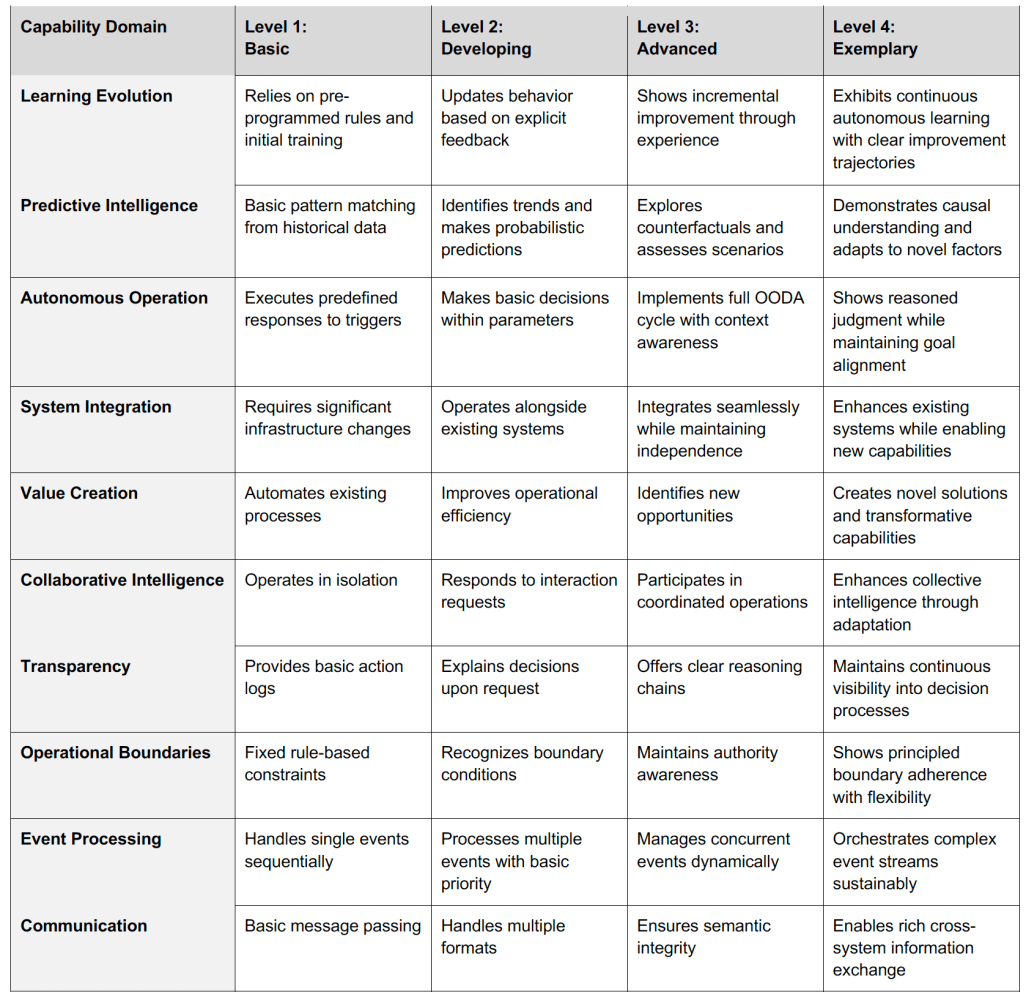

AI Agent Capability Maturity Matrix

This matrix provides a structured approach to evaluating AI agent capabilities, progressing from basic automation to true agency. Each level represents a meaningful advancement in autonomous behavior and operational sophistication.


Assessment Guidelines

The matrix should be applied with consideration for the specific operational context and requirements. Not all systems need to achieve Level 4 in all domains—the goal is to identify the appropriate maturity level for each capability based on organizational needs.
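Comparing assessed and target levels per capability is simple enough to sketch. The matrix itself is not reproduced in this text, so the code assumes levels numbered 1 to 4 and uses hypothetical capability keys. Only capabilities with a target are compared, and exceeding a target is not reported. This mirrors the point that not every capability needs Level 4.

```python
from typing import Dict, List, Tuple

MIN_LEVEL, MAX_LEVEL = 1, 4   # assumed scale; Level 4 is the highest

def maturity_gaps(assessed: Dict[str, int],
                  target: Dict[str, int]) -> List[Tuple[str, int]]:
    """Return (capability, shortfall) pairs, largest shortfall first.

    Every capability in `target` must also appear in `assessed`.
    """
    for levels in (assessed, target):
        for name, level in levels.items():
            if not MIN_LEVEL <= level <= MAX_LEVEL:
                raise ValueError(f"{name}: level {level} is outside {MIN_LEVEL}-{MAX_LEVEL}")
    gaps = [(cap, goal - assessed[cap]) for cap, goal in target.items()]
    # Exceeding a target is not a gap, so only positive shortfalls are kept.
    return sorted((g for g in gaps if g[1] > 0), key=lambda g: (-g[1], g[0]))

assessed = {"learning": 2, "rules_of_engagement": 3, "event_driven": 4}
target = {"learning": 4, "rules_of_engagement": 4, "event_driven": 3}
print(maturity_gaps(assessed, target))
# [('learning', 2), ('rules_of_engagement', 1)]
```
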

Key Assessment Principles:

- Evaluate observable behaviors rather than technical specifications

- Consider the coherence between different capability levels

- Focus on genuine advancement rather than superficial compliance

- Maintain awareness of interdependencies between capabilities

The progression across levels should demonstrate increasing autonomy, sophistication, and operational value while maintaining alignment with organizational objectives and ethical constraints.

The Path Forward: From Evaluation to Implementation

As organizations navigate the rapidly evolving landscape of AI agents, this framework serves as both a compass and a map. The compass points toward true agency—helping organizations distinguish between marketing claims and genuine capabilities. The map provides a structured path for evaluation and implementation, ensuring that investments in AI technology deliver real operational value.

Beyond Agent Washing

The challenge of “agent washing” for industrial applications will likely intensify as more vendors rush to market with AI solutions. However, by applying this framework’s systematic evaluation approach, organizations can:

- Make informed decisions about AI agent implementations

- Set realistic expectations for agent capabilities

- Create clear development roadmaps for their AI initiatives

- Measure and validate genuine improvements in agent performance

Strategic Implementation

Success with AI agents requires more than just technical evaluation—it demands a strategic approach to implementation:

- Start with specific, well-defined operational challenges where human operators currently combine experience with real-time data

- Use the maturity matrix to benchmark current capabilities and set development targets

- Build experience through controlled implementation while maintaining realistic expectations

- Focus on measurable operational outcomes rather than technical sophistication

Looking Ahead

The true value of industrial AI agents lies not in their technical capabilities alone, but in their ability to enhance human decision-making and operational effectiveness. As these technologies mature, organizations that understand and apply these evaluation criteria will be better positioned to:

- Leverage AI agents for genuine operational improvement

- Build trust between human operators and AI systems

- Create scalable, adaptable operational environments

- Drive meaningful innovation in their industries

The journey toward true AI agency is just beginning. This framework provides a foundation for that journey, helping organizations move beyond the hype to create real value through thoughtful implementation of AI agent technologies.

The question is no longer whether AI agents will transform industry, but how organizations will ensure they implement genuine agents rather than superficial solutions. The answer lies in rigorous evaluation, strategic implementation, and a clear understanding of what constitutes true agency.

What are your thoughts, and how can we improve on this framework?

Michael Carroll* – I asked Mike for permission to use and extend his questions to create the framework, and here is his disclaimer:

“I support it as I in no way thought of it as complete or correct. It’s all a work in progress that needs discussed, refined, defined and tested, by us all. The more who work on it the faster the edge of the frontier expands”

Thank you, Mike, for kickstarting this.

Our GitHub repo has more technical information if you are interested. You can also contact me or Gavin Green for more information.

Read more on MAGS at The Digital Engineer

About the Author: Pieter van Schalkwyk is the CEO of XMPro, which focuses on helping organizations bridge the gap between industrial operations and enterprise systems through practical AI solutions. With over thirty years of experience in industrial automation and digital transformation, Pieter leads XMPro’s mission to make industrial operations more intelligent, efficient, and responsive to business needs.