Pieter van Schalkwyk

CEO at XMPRO

Recent discourse around AI agent development has been energized by the paper “Fully Autonomous AI Agents Should Not be Developed” (Mitchell et al., 2025). As the principal architect of XMPro’s Multi-Agent Generative Systems (MAGS), I have spent a lot of time, working alongside Gavin Green, on the fundamental challenges of autonomous agent development.

While the paper raises vital concerns, it presents a false dichotomy between full autonomy and human control. Let me explain why.

The Fundamental Challenge

First, let’s acknowledge the paper’s core insight: unbounded autonomy in complex systems inherently creates unbounded risk. This is not just true for AI—it’s a fundamental principle of complex systems theory. However, this doesn’t mean we must prohibit autonomous systems entirely. Instead, it suggests we need a more nuanced approach to autonomy itself.

Bounded Autonomy: A framework where systems operate independently within well-defined constraints, maintaining freedom of action within predetermined safe envelopes while preserving human agency over boundary conditions.

The Human Parallel

Consider how we manage human autonomy in critical systems. Nuclear power plant operators have significant autonomy in their daily operations, but this autonomy exists within strict operational boundaries defined by:

- Technical specifications

- Operating procedures

- Safety protocols

- Regulatory requirements

We don’t respond to operator risks by eliminating human autonomy—we create structured environments where autonomy can be exercised safely. This same principle can and should apply to AI agents.

A Systemic Approach to Safe Autonomy

At XMPro, we’ve developed a multi-layered approach to agent governance that addresses the paper’s concerns while maintaining practical operational autonomy. This framework rests on three fundamental pillars:

1. Computational Policies

These are not mere guidelines but executable constraints that define the “physics” of what agents can and cannot do. Think of them as the equivalent of physical safety interlocks in industrial machinery—they don’t rely on decision-making but create fundamental boundaries that cannot be violated.
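To make the interlock analogy concrete, here is a minimal Python sketch of an executable constraint. It is an illustration only, not XMPro's implementation: the names `Envelope`, `PolicyViolation`, `ENVELOPES`, and `enforce`, as well as the pump parameter and its limits, are hypothetical. The idea is that every proposed action is routed through a check that sits outside the agent's reasoning, and anything without an explicit policy is refused.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Envelope:
    """Hypothetical safe operating range for one controllable parameter."""
    low: float
    high: float


class PolicyViolation(Exception):
    """Raised when a proposed action falls outside its envelope."""


# Illustrative limits only; real values would come from technical specifications.
ENVELOPES = {"pump_speed_rpm": Envelope(500.0, 3000.0)}


def enforce(parameter: str, value: float) -> float:
    """Return the value unchanged if it is inside the envelope, else raise.

    Intended to be the only path between an agent's proposal and the actuator.
    """
    envelope = ENVELOPES.get(parameter)
    if envelope is None:
        # Default deny: a parameter with no policy cannot be acted on.
        raise PolicyViolation(f"no policy defined for {parameter!r}")
    if not envelope.low <= value <= envelope.high:
        raise PolicyViolation(
            f"{parameter}={value} outside [{envelope.low}, {envelope.high}]"
        )
    return value
```

Because the envelope is a frozen dataclass and the check raises rather than warns, the boundary does not depend on the agent choosing to comply, which is the property the interlock comparison is pointing at.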

2. Deontic Principles

Building on the work of moral philosophers and ethicists, we encode ethical constraints as formal logical structures. These aren’t simple if-then rules but rich frameworks that capture the nuanced relationships between obligations, permissions, and prohibitions.
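A deliberately simplified sketch of how obligations, permissions, and prohibitions might be represented as data is shown below. A real deontic framework would be considerably richer than this; the `Modality`, `Norm`, `is_allowed`, and `unmet_obligations` names, the role, and the actions are all assumptions made for illustration. The sketch assumes two conventions: a prohibition overrides any permission, and anything not explicitly permitted or obliged is denied.

```python
from dataclasses import dataclass
from enum import Enum


class Modality(Enum):
    OBLIGATION = "obligation"
    PERMISSION = "permission"
    PROHIBITION = "prohibition"


@dataclass(frozen=True)
class Norm:
    """One deontic statement: a role may, must, or must not perform an action."""
    modality: Modality
    role: str
    action: str


# Hypothetical norms for a hypothetical maintenance agent.
NORMS = [
    Norm(Modality.PERMISSION, "maintenance_agent", "schedule_work_order"),
    Norm(Modality.PROHIBITION, "maintenance_agent", "override_safety_trip"),
    Norm(Modality.OBLIGATION, "maintenance_agent", "log_decision"),
]


def is_allowed(role: str, action: str, norms=NORMS) -> bool:
    """Prohibitions win; otherwise the action needs a permission or obligation."""
    applicable = {n.modality for n in norms if n.role == role and n.action == action}
    if Modality.PROHIBITION in applicable:
        return False
    return bool(applicable & {Modality.PERMISSION, Modality.OBLIGATION})


def unmet_obligations(role: str, performed: set, norms=NORMS) -> set:
    """Return the obliged actions the role has not yet performed."""
    return {
        n.action
        for n in norms
        if n.role == role
        and n.modality is Modality.OBLIGATION
        and n.action not in performed
    }
```

Keeping the norms as data rather than as branches in agent code means they can be reviewed, versioned, and audited separately from the agent's decision logic.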

3. Expert System Rules

Drawing from decades of domain expertise, we implement structured knowledge representations that guide agent behavior in specific contexts. This transforms tacit human expertise into explicit computational guardrails.
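As a rough illustration of turning tacit expertise into explicit guardrails, the sketch below encodes two rules of thumb as named, inspectable rules. Everything in it is hypothetical: the `Rule` type, the `evaluate` function, the sensor names, and the thresholds are placeholders and not values from any real asset or from XMPro's rule base.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Facts = Dict[str, float]


@dataclass(frozen=True)
class Rule:
    """A named condition over observed facts, paired with expert guidance."""
    name: str
    condition: Callable[[Facts], bool]
    recommendation: str


# Illustrative thresholds only; a domain expert would supply real ones.
RULES = [
    Rule(
        "high_vibration",
        lambda f: f.get("vibration_mm_s", 0.0) > 7.1,
        "reduce load and schedule inspection",
    ),
    Rule(
        "bearing_overheat",
        lambda f: f.get("bearing_temp_c", 0.0) > 95.0,
        "stop the asset and notify a supervisor",
    ),
]


def evaluate(facts: Facts, rules: List[Rule] = RULES) -> List[str]:
    """Return the recommendations of every rule whose condition holds."""
    return [rule.recommendation for rule in rules if rule.condition(facts)]
```

For example, `evaluate({"vibration_mm_s": 9.0, "bearing_temp_c": 60.0})` fires only the vibration rule. Each rule carries a name, so a recommendation can always be traced back to the piece of expertise that produced it.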

Deep Foundations in Rules of Engagement

I’ve explored these concepts of bounded autonomy and governance frameworks extensively in my recent collaboration with Dr. Zoran Milosevic, particularly in our article “Part 5 – Rules of Engagement: Establishing Governance for Multi-Agent Generative Systems” (September 4, 2024).

There, we establish how deontic tokens—formal representations of obligations, permissions, and prohibitions—create what we call computational accountability. This framework, based on the ISO/ITU-T/IEC open distributed processing systems standard, demonstrates how autonomous systems can maintain both operational freedom and strict compliance through hierarchical rule structures.

The key insight from this work is that governance isn’t an external constraint on autonomous systems but rather a fundamental architectural component that enables their safe operation.

The Role of Human Oversight

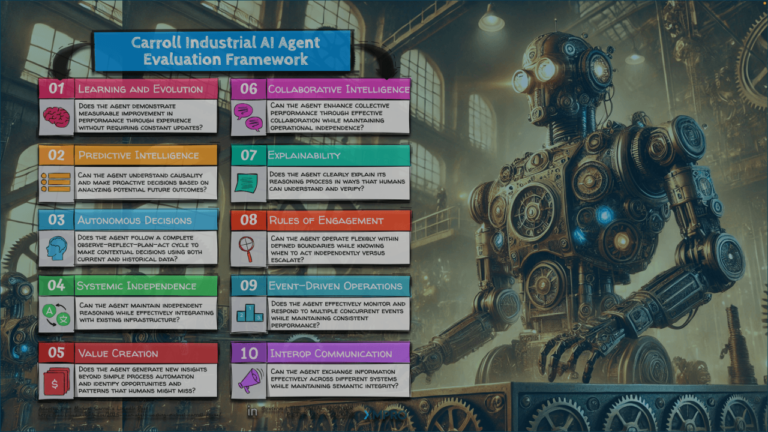

A crucial insight often missed in these discussions is that meaningful human oversight doesn’t require constant intervention. Instead, it requires:

Observability: All agent actions must be transparent and traceable, creating what we call “computational accountability.”

Intelligibility: The reasoning behind agent decisions must be explainable in human terms, enabling what we might call “semantic debugging.”

Intervenability: Humans must maintain the ability to modify or halt agent operations when necessary, creating what we term “graduated control.”
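The three requirements above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a description of any production system: the `OverseenAgent` class and its methods are hypothetical. Each action is recorded (observability) together with a human-readable rationale (intelligibility), and a halt switch that a person can set blocks further actions (intervenability).

```python
import json
import threading
from datetime import datetime, timezone


class OverseenAgent:
    """Hypothetical wrapper that records every action and honors a halt switch."""

    def __init__(self, name: str):
        self.name = name
        self.audit_log = []               # observability: every attempt is recorded
        self._halted = threading.Event()  # intervenability: set by a human operator

    def halt(self) -> None:
        """Stop the agent from executing any further actions."""
        self._halted.set()

    def act(self, action: str, rationale: str) -> bool:
        """Attempt an action; return True if executed, False if blocked."""
        outcome = "blocked" if self._halted.is_set() else "executed"
        self.audit_log.append({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "agent": self.name,
            "action": action,
            "rationale": rationale,       # intelligibility: reasoning in plain terms
            "outcome": outcome,
        })
        return outcome == "executed"

    def export_log(self) -> str:
        """Serialize the audit trail for human review."""
        return json.dumps(self.audit_log, indent=2)
```

Note that blocked attempts are logged as well, so a reviewer can see what the agent tried to do after an intervention and not only what it succeeded in doing.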

Practical Implementation

At XMPro, we’ve implemented this framework through what we call the “Rules of Engagement” system. This creates three distinct but interrelated control layers:

Operational Boundaries define the basic physics of what agents can do, implemented through immutable computational policies.

Decision Frameworks govern how agents process information and make choices, implementing what we might call “bounded rationality by design.”

Oversight Mechanisms maintain human agency through graduated intervention protocols, creating what we term “graceful degradation of autonomy.”
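One way to picture graduated intervention is as a ladder of autonomy levels that a human can step down one rung at a time, instead of a single on/off switch. The sketch below is an assumption-laden illustration: the `AutonomyLevel` names, the `degrade` function, and the `disposition` labels are hypothetical and are not taken from the Rules of Engagement system itself.

```python
from enum import IntEnum


class AutonomyLevel(IntEnum):
    """Hypothetical ladder, from no autonomy to full autonomy within bounds."""
    HALTED = 0
    RECOMMEND_ONLY = 1
    APPROVAL_REQUIRED = 2
    AUTONOMOUS = 3


def degrade(level: AutonomyLevel) -> AutonomyLevel:
    """Step down one level, never below HALTED."""
    return AutonomyLevel(max(level - 1, AutonomyLevel.HALTED))


def disposition(level: AutonomyLevel, within_boundaries: bool) -> str:
    """Decide what happens to a proposed action at the current level."""
    # Operational boundaries are checked first and apply at every level.
    if not within_boundaries or level is AutonomyLevel.HALTED:
        return "reject"
    if level is AutonomyLevel.AUTONOMOUS:
        return "execute"
    if level is AutonomyLevel.APPROVAL_REQUIRED:
        return "await_human_approval"
    return "recommend_to_human"
```

In this sketch the boundary check comes before the autonomy level is consulted, so even a fully autonomous agent cannot execute an out-of-bounds action, while each intervention narrows what the agent may do without removing it from service entirely.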

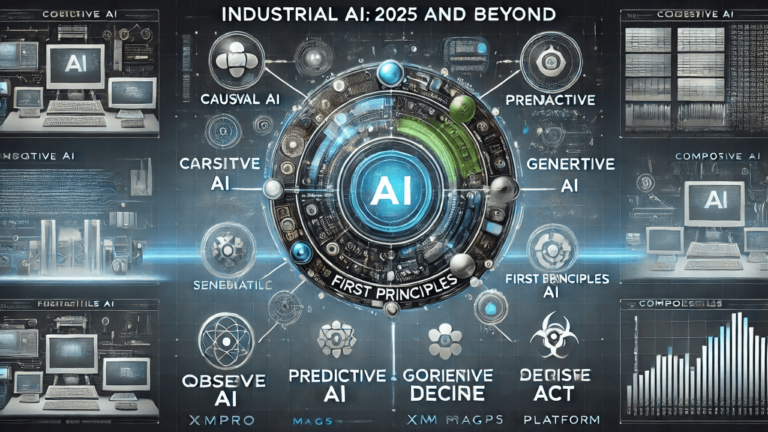

Looking Forward

The future of AI agents lies not in unbounded autonomy but in what I call “structured independence”—the freedom to operate efficiently within well-defined constraints. This approach:

- Addresses the paper’s legitimate safety concerns

- Maintains operational efficiency

- Preserves human agency

- Enables scalable deployment

The key insight is that true safety emerges not from the absence of autonomy but from bounding it carefully.

Final Observation

While I agree with many of the paper’s concerns, I believe the solution lies not in prohibition but in principled constraint. By implementing bounded autonomy through computational policies, deontic principles, and expert system rules—all while maintaining meaningful human oversight—we can create AI agents that are both powerful and trustworthy.

The challenge before us is not whether to develop autonomous agents, but how to develop them responsibly. At XMPro, we’re committed to showing that this is not just possible but essential for the future of industrial AI.

Pieter van Schalkwyk is the CEO of XMPro, a pioneer in industrial AI agent management and governance. With over three decades of experience in digital transformation and industrial automation, he leads XMPro’s development of safe and scalable AI agent architectures.

About XMPro: We help industrial companies automate complex operational decisions. Our cognitive agents learn from your experts and keep improving, ensuring consistent operations even as your workforce changes.

Our GitHub repo has more technical information if you are interested. You can also contact me or Gavin Green for more information.

Read more on MAGS at The Digital Engineer